Privacy essentials for safe and responsible chatbot use

Remember that 2 a.m. conversation you had with an AI chatbot about your allergies? Or the question you asked about your recent bank statements? What feels private in the moment is often anything but.

Recent reports have shown that contractors hired by Meta to review AI interactions sometimes see unredacted personal details, such as health information, financial records and even identifying data, that most people assume are confidential.

Founder and CEO of Redactable.

This is the not-so-public issue at the heart of today’s AI race. Chatbots are being deployed at scale in banking, healthcare, government and beyond. They are fast, convenient and increasingly capable of handling complex and sometimes sensitive queries. But convenience should never come before security. Every prompt, every transcript and every backend log carries data that can live much longer than users intended.

Before becoming a tech founder, I spent years surrounded by tax returns, Social Security numbers, inspection reports and account statements. Back then, “protection” meant drawing boxes over text and hoping it was safe. It wasn’t. That frustration became an opportunity when I realized the real solution was not hiding data, but permanently removing it.

Chatbots and AI systems are the newest arena where these issues come to light. If we want to protect privacy and build trust in this new era, we can’t rely on cosmetic fixes. Both businesses and users need to rethink what it means to communicate with AI. Here are five things to remember.

1. Chatbots do not forget

Most people chat with AI like it’s a private conversation, but those records often stick around far longer than we realize. That quick health question, that troubleshooting log, or that internal IT request may be stored, reviewed and even used to train future models. If the information is not sanitized, it can remain in those models indefinitely.

For businesses, this means retention policies must be explicit, not implied. If transcripts are kept for customer service quality, they should have clear expiration dates. If logs are used for training, sensitive details must be removed before they ever reach human reviewers.
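As a rough illustration of what an explicit retention policy could look like in practice, here is a minimal Python sketch. The purposes, the retention windows and the `Transcript` structure are all assumptions made for the example, not a description of any particular product. The point is only that every stored transcript carries a purpose, every purpose has an expiration window, and anything without a policy is rejected rather than kept by default.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per purpose; real values are a policy decision.
RETENTION = {
    "quality_review": timedelta(days=30),
    "dispute_resolution": timedelta(days=180),
}


@dataclass
class Transcript:
    transcript_id: str
    purpose: str
    created_at: datetime
    text: str


def expires_at(t: Transcript) -> datetime:
    """Compute an explicit expiry; a purpose with no policy is an error."""
    if t.purpose not in RETENTION:
        raise ValueError(f"no retention policy for purpose {t.purpose!r}")
    return t.created_at + RETENTION[t.purpose]


def purge_expired(store: list[Transcript], now: datetime) -> list[Transcript]:
    """Return only the transcripts still inside their retention window."""
    return [t for t in store if expires_at(t) > now]


# Example: one transcript is past its 30-day window, one is not.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
store = [
    Transcript("old", "quality_review", now - timedelta(days=45), "..."),
    Transcript("recent", "quality_review", now - timedelta(days=5), "..."),
]
store = purge_expired(store, now)
```

In a real system the purge would also have to reach backups and derived logs, which this sketch does not attempt to model.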

Individual users are vulnerable too. For them, the safest assumption is that nothing shared with a chatbot is ever truly private. A parent might ask a virtual assistant to draft an email to a school about a child’s medication. A marathon runner might ask a wellness app for advice on treating a sprained ankle.

Each example sounds like a simple request, but every word can end up stored on servers for months or years. Not every number is sensitive, but account numbers, Social Security numbers and other identifiers are. Keep those for secure portals or phone calls.

2. Redaction is not optional

The most common misconception about data protection is that obscuring information is the same as eliminating it. In reality, hiding fields, masking inputs or blacking out text only covers the information on the screen. The underlying data often still exists in the file and can be easy to uncover.

This is particularly dangerous in chatbot conversations, because sensitive details may be embedded deep in transcripts or error logs. Businesses that rely on superficial fixes create an illusion of safety while exposing themselves to breaches and leaks.

True redaction is permanent removal. Without it, companies risk losing both compliance and customer trust.
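The difference between hiding and removing can be shown in a few lines of Python. This is only a sketch under stated assumptions: the record layout is hypothetical, and the pattern covers just one format of US Social Security number (`123-45-6789`), so it should not be read as a complete detector.

```python
import re

# Illustrative pattern for SSNs written as 123-45-6789; real detection needs more.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_for_display(record: dict) -> str:
    """Cosmetic fix: the screen shows a masked value, the record is unchanged."""
    return SSN_PATTERN.sub("***-**-****", record["text"])


def redact(record: dict) -> dict:
    """Removal: build a new record that no longer contains the value."""
    return {**record, "text": SSN_PATTERN.sub("[REDACTED]", record["text"])}


stored = {"id": "t-1", "text": "My SSN is 123-45-6789, can you check my refund?"}

shown = mask_for_display(stored)
# The masked view looks safe, but the stored text still holds the number.
leaked = SSN_PATTERN.search(stored["text"]) is not None

cleaned = redact(stored)
# After redaction, the number is absent from the record that gets stored.
still_present = SSN_PATTERN.search(cleaned["text"]) is not None
```

Here `leaked` is true and `still_present` is false. Removal only becomes permanent once the original record is discarded and `cleaned` is the only copy that is written to transcripts or logs.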

3. Data minimization means removal

Some organizations pride themselves on collecting less data, which is a good start. But less data does not solve the problem if the data collected is being stored indefinitely.

Chatbot transcripts are a perfect example. Many are kept to retrain models, to analyze customer sentiment, or to resolve disputes. This means there’s a growing archive of sensitive information that becomes a liability with every passing day.

Regulators are beginning to respond, from state-level child data protection laws to international privacy frameworks. Businesses need to be proactive too. Companies that adopt permanent removal now will be positioned ahead of the inevitable compliance curve.

Consumers need to minimize too. An average user often overshares in chatbot conversations without realizing it. For instance, a customer trying to understand an internet bill might type out full account numbers, or a patient might upload an entire lab report instead of only a few required details.

Companies can help by encouraging users to minimize or sanitize data before uploading, so both sides share the responsibility of reducing exposure.
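One way a company might encourage this is a check that runs before a message is sent and prompts the user if something looks like an identifier. The Python sketch below is an assumption-laden illustration: the detector names and patterns are hypothetical, deliberately simple, and will both miss real identifiers and flag harmless numbers.

```python
import re
from typing import Optional

# Hypothetical detectors; simple patterns chosen for illustration only.
DETECTORS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "long_number": re.compile(r"\b\d{9,}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def find_sensitive(message: str) -> list[str]:
    """Return the names of detectors that match, in alphabetical order."""
    return sorted(name for name, pat in DETECTORS.items() if pat.search(message))


def reminder(message: str) -> Optional[str]:
    """Build a prompt for the user, or None if nothing was flagged."""
    found = find_sensitive(message)
    if not found:
        return None
    return "This message may contain: " + ", ".join(found) + ". Remove it before sending?"


bill_question = "Why is my bill for account 4400123456789 so high?"
flags = find_sensitive(bill_question)
```

For the internet bill example, `flags` contains `long_number`, so the user would see a reminder before the full account number ever leaves the device.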

Before typing into a chatbot, it helps to pause and ask: is this something I would be comfortable keeping in a searchable log? If not, it isn’t worth the risk. Many messaging apps and browser extensions now allow users to clear history automatically. These tools are not a replacement for true redaction, but they reduce exposure.

4. Transparency builds user confidence

The average person is more likely to use AI systems if they know how their information is going to be handled. Hiding the mechanics of data retention creates suspicion and damages trust. Clear communication about what is stored, how long it is stored and whether sensitive details are redacted goes a long way.

For businesses, this is not just a matter of ethics but of competitive advantage. Customers will reward the companies that explain their practices in plain language and give users meaningful control. Transparency transforms privacy into a sought-after feature.

Another way to strengthen trust is for companies that build or use chatbots to actively suggest data sanitization before upload. Even a simple reminder can set the right expectation. Businesses that go the extra mile by helping customers educate themselves about data privacy gain a competitive advantage in today’s market.

5. Privacy is a competitive advantage

The assumption that users only care about speed is short-sighted. In reality, many people care just as much about how their data is handled. According to a recent Cisco survey, 75% of respondents would not buy from a company they do not trust with their data.

In an increasingly crowded market, privacy becomes a brand differentiator. The companies that treat it as a feature, not an afterthought, will stand out. Those that ignore it will risk regulatory action and lose customer loyalty. In business technology, trust compounds faster than novelty.

Companies that sanitize customer data and are upfront and transparent about it will stand out even more.

The path forward

AI chatbots and the foundational models behind them are not going away, nor should they. This technology offers real efficiency, accessibility and scale. But it will only thrive if both businesses and users recognize the risks that come with convenience and know how to protect themselves.

Just as I once learned that drawing boxes over sensitive data did not protect my clients, businesses today must learn that true privacy requires a thoughtful approach that is tailored to their organizations. Anything less is wishful thinking.

Most companies still respond to leaks after the damage is done. The real shift will come when we stop treating privacy as an emergency response and start designing systems that are privacy-conscious from the very beginning. That is how AI earns the right to handle our most sensitive conversations.

Amanda Levay is the Founder and CEO of Redactable.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more The search for transparency and reliability in the AI era

The search for transparency and reliability in the AI era

Six questions to ask when crafting an AI enablement plan

Six questions to ask when crafting an AI enablement plan

“Everybody's under pressure to do more with less” - Why Okta says you need an AI agent governance strategy, and sooner rather than later

“Everybody's under pressure to do more with less” - Why Okta says you need an AI agent governance strategy, and sooner rather than later

One-size-fits-all AI guardrails do not work in the enterprise

One-size-fits-all AI guardrails do not work in the enterprise

Why long-term memory is the missing layer for AI-driven experiences

Why long-term memory is the missing layer for AI-driven experiences

Top 10 AI chatbot do's and don’ts to help you get the most out of ChatGPT, Gemini, and more

Latest in Pro

Top 10 AI chatbot do's and don’ts to help you get the most out of ChatGPT, Gemini, and more

Latest in Pro

Salesmate CRM review 2026

Salesmate CRM review 2026

HoneyBook CRM review 2026

HoneyBook CRM review 2026

Agile CRM review 2026

Agile CRM review 2026

From SaaS to AI: the technological and cultural shifts leaders must confront

From SaaS to AI: the technological and cultural shifts leaders must confront

Auto giant LKQ says it's the latest firm to be hit by Oracle EBS data breach

Auto giant LKQ says it's the latest firm to be hit by Oracle EBS data breach

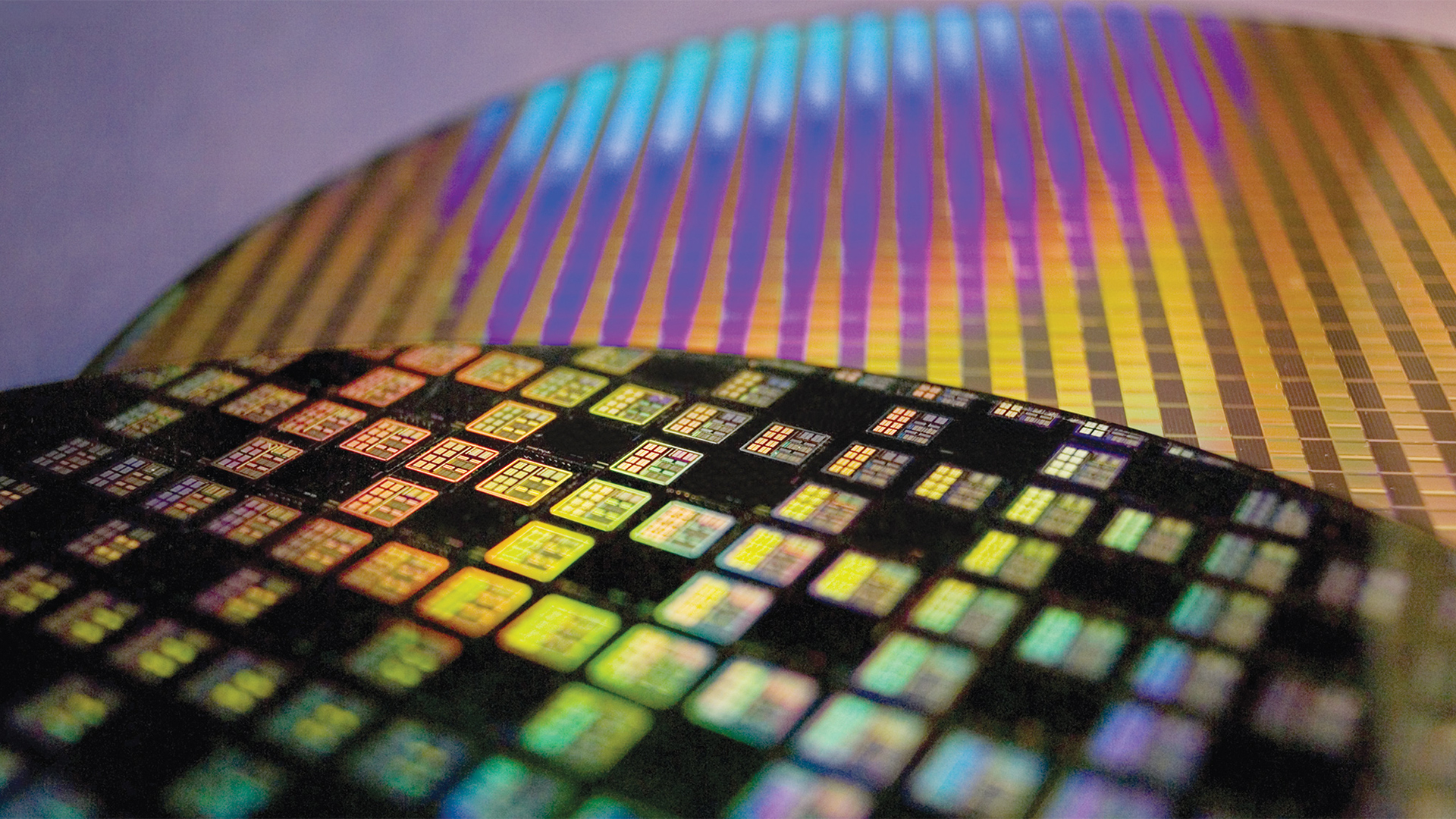

It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn

Latest in Opinion

It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn

Latest in Opinion

Vacuum cleaner features ranked from 'essential' to 'unnecessary', by a professional tester

Vacuum cleaner features ranked from 'essential' to 'unnecessary', by a professional tester

AI blindness is costing your business: how to build trust in the data powering AI

AI blindness is costing your business: how to build trust in the data powering AI

Holidays 2025: retailers face a perfect storm of traffic, threats, and customer pressure

Holidays 2025: retailers face a perfect storm of traffic, threats, and customer pressure

As RAM panic grips the PC-building community, I'm putting my feet up and relaxing - here's why

As RAM panic grips the PC-building community, I'm putting my feet up and relaxing - here's why

The power and potential of agentic AI in cybersecurity

The power and potential of agentic AI in cybersecurity

The business case for sustainability: engaging with consumers to drive conscious consumerism

LATEST ARTICLES

The business case for sustainability: engaging with consumers to drive conscious consumerism

LATEST ARTICLES- 1Oracle has one last Ampere hurrah - new cloud platforms offer up to 192 custom Arm cores, and aren't OCI exclusive, unlike Graviton or Cobalt

- 2Apple Music app in ChatGPT

- 3It's about time! Microsoft finally kills off encryption cipher blamed for multiple cyberattacks - RC4 bites the dust at last

- 4This new ‘smallest’ subwoofer packs deep bass into a 10-inch cube

- 5It's not just RAM getting more expensive - the tools to make chips are set to explode in cost too, experts warn